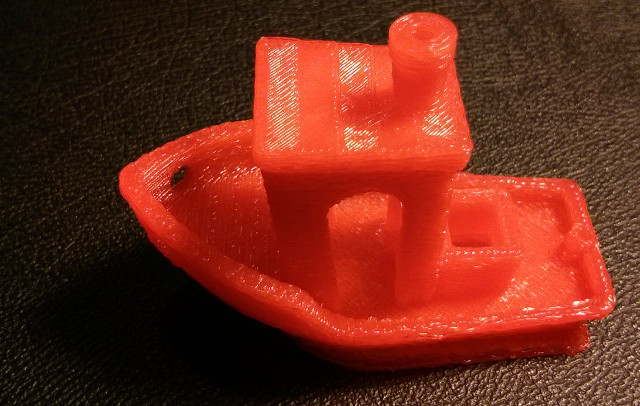

TheThirdDimension

Page Created: 12/31/2015 Last Modified: 5/13/2020 Last Generated: 2/25/2026

The third dimension may just be an illusion our minds have created, for physics does not require it to exist at all.

Our computers can also create illusions, and I was an early proponent of VRML during the 1990s virtual-reality craze, mistakenly believing that it would immediately supplant HTML. I figured, why would people browse to an organization's web page, when they could actually "walk" through an institution virtually? Our memory must have first evolved to store places, not words.

But I failed to apply what I learned about the history of mass media, that advancements in media technology do not necessarily replace older media. Radio didn't kill Gutenberg's movable type, television didn't kill radio, and the computer didn't kill television. Instead, abstract forms of information emerged, and these forms have persistence.

I first read about 3D graphics engines after I saw Carmack and Romero's Wolfenstein 3D videogame around 1992, the same year as the virtual reality movie The Lawnmower Man. I had just completed Calculus III the year before, an extremely difficult class for me, essentially applying everything I learned about Calculus to three dimensions, but without any computer visualization tools, just manually drawing functions on paper using isometric projection. Luckily I took a mechanical drawing class when I was 14, which was years before 3D computer-aided design (CAD) software was even invented. It at least gave me a rudimentary ability to project and extrude between 2D and 3D complex shapes, but there was nothing simple about it, since adding another dimension adds another order of complexity.

Today I am still fascinated with world engines, specifically the RogueLike, the MMORPG, and Minecraft, and you can't think about creating worlds that simulate, or at least mimic, our physical world without first understanding our concept of Euclidean space. To me, roguelikes are the most elemental form, and they even simulate real-world physics computations, such as Line of Sight, a basic building block of the ray tracing of light.
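As a rough illustration of the line-of-sight idea, here is a minimal Python sketch. It assumes a tile map stored as a list of strings with `#` for walls, and it walks the cells between two points with Bresenham's line algorithm, which is one common choice among several that roguelikes use. The names `line_cells`, `has_line_of_sight`, and `LEVEL` are hypothetical and not taken from any particular game.

```python
def line_cells(x0, y0, x1, y1):
    """Yield the grid cells from (x0, y0) to (x1, y1) using Bresenham's algorithm."""
    dx, dy = abs(x1 - x0), abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx - dy
    x, y = x0, y0
    while True:
        yield x, y
        if (x, y) == (x1, y1):
            return
        e2 = 2 * err
        if e2 > -dy:   # step along x
            err -= dy
            x += sx
        if e2 < dx:    # step along y
            err += dx
            y += sy


def has_line_of_sight(level, start, end):
    """True if no wall ('#') lies strictly between start and end (x, y) cells."""
    cells = list(line_cells(*start, *end))
    return all(level[y][x] != "#" for x, y in cells[1:-1])


# Hypothetical three-row map with a single wall tile at x=4, y=1.
LEVEL = [
    "..........",
    "....#.....",
    "..........",
]

blocked = has_line_of_sight(LEVEL, (0, 1), (9, 1))
clear = has_line_of_sight(LEVEL, (0, 0), (9, 0))
print(blocked, clear)
```

The viewer and target cells themselves are excluded from the wall test, so a creature standing next to a wall can still be seen.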

Many roguelikes, for example, treat the world in the way that we normally perceive it, as layers of flat 2D planes, since this is computationally easier for our brains, both in playing the game and in simulating it. This works very well, since biologically, we hominids are neither birds nor fish; we are gravity-bound surface dwellers and orient ourselves to mostly flat planes (and plains). Homo sapiens sapiens are well-equipped for these planes, with two forward-facing eyes with depth perception, the ability to run long distances over those planes, cooperate and use tools, and a tenacity of latching onto our prey that is not easily broken. We are the heat-seeking missiles of the animal kingdom.

Unlike other surface-dwelling animals, we don't just run around biting things, we pick things up with our two dexterous manipulators and rotate them around in front of those eyes, we break apart and recombine those things to create new things, we record or remember those new things, and we change the physical world around us.

While the illusion of space can be clearly represented by constructing an artificial medium, an engine or model of the world using physical technology or even our imagination, what we do within that medium, the story, is not so clear. To the engineer, one must construct something within known physical constraints to perform a known physical function, but to the artist, the constraints and the function lie only within our imagination, that mysterious set of ideas from which we can imagine and understand, only limited by that which lies outside of this domain; the unknown, or randomness.

At our core, we are part engineer and part artist; we are generalists. Only the recent hyper-specialization of our society, made possible through our use of language and communication, is pressuring us generalists to conform to a specialty, frequently at the cost of our individual health, perhaps an evolutionary process being orchestrated through a higher-order egregore. For millions of years, the natural environment applied evolutionary pressure on ancient hominids to create our species, but today, our artificial environment, our cities and machines, seems to be doing the same thing to us. Man may be a very different creature one million years from now.

Most of us, if we are trying to describe a 3D object, would unwittingly break it down into 2D and 3D primitives (squares, cubes, circles, cylinders, triangles) and then describe the relative position of these primitives. Take a house, for example. To describe the stereotypical American house to someone, we might say it has a squarish bottom with a triangular roof. Centered, at the bottom of one of the square sides is a smaller, inset rectangle (the door) with a tiny protruding sphere (the doorknob).

I've been wondering why we do this instead of conveying the measurements and concluded that this is more efficient for us since it is probably how our brain stores the object in its hierarchical memory. We just communicate the higher-level "source code" to each other, so to speak. We must somehow store a set of rules about how to generate the object from simpler shapes. Perhaps even our memory recall of the image is "generated" in this way. If we were to try to draw this object, we first replay these rules in our head until we have reconstructed it in memory, then use our brain's visuospatial "hardware" to rotate it in our mind, before "projecting" or drawing it on a 2D piece of paper.

The widespread use of digital computer hardware beginning in the latter 20th century created a separation of certain ideas that should never have been separated in the first place. Over the years, I have forced myself to unlearn concepts when I discovered they were incomplete representations. Early algorithms that interacted with the binary hardware had to give specific rules to the computer, and this still continues today with low-level software. But once the hardware had been abstracted, the algorithms could give generalized rules. Abstracting is a DAC for ideas, a Digital-to-Analog conversion, but we don't normally think of it this way. And the more we abstract, the smoother, or more analog, the idea becomes. Digital computers can create what appear to be realistic analog worlds, but only through extreme levels of abstraction.

At the lowest level, to tell a computer to draw a line, you may have to say move a byte to this place, now move a byte to this place, like assembling checkers on a grid. At a higher level, you could say, draw a line from this place to that place using the algebraic equation of a line, and all corresponding checkers line up. We call a line a geometric primitive, but in this context, the line is not the true primitive. The true primitive is the set of rules that draws the points that make up the line, a union of many-to-one. We are seeing the interconnections of language, layers and layers of rules. Sometimes we represent things symbolically (algebra), sometimes we represent them spatially (geometry), like writing versus painting. And just like a writer can describe the images in a painting, a painter can create the images of a written story. Neither is absolute; both are relatively positioned somewhere in the middle of our larger process of information abstraction.
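The two levels described above can be sketched in Python. This is only an illustration, not how any particular graphics system works: `make_grid`, `set_cell`, and `draw_line` are hypothetical names, and the line is rasterized naively from the slope-intercept form y = mx + b, which assumes the line runs left to right and is no steeper than 45 degrees.

```python
def make_grid(width, height):
    """Return a height x width grid of '.' cells (the empty checkerboard)."""
    return [["." for _ in range(width)] for _ in range(height)]


def set_cell(grid, x, y):
    """Lowest level: place one checker at one explicit position."""
    grid[y][x] = "#"


def draw_line(grid, x0, y0, x1, y1):
    """Higher level: one rule, y = m*x + b, places every checker.

    Assumes x0 < x1 and a slope between -1 and 1 (a simplification).
    """
    m = (y1 - y0) / (x1 - x0)
    b = y0 - m * x0
    for x in range(x0, x1 + 1):
        set_cell(grid, x, round(m * x + b))


grid = make_grid(8, 4)
draw_line(grid, 0, 0, 7, 3)
picture = "\n".join("".join(row) for row in grid)
print(picture)
```

The caller of `draw_line` never mentions an individual cell; the rule generates all eight of them, which is the sense in which the rule, rather than the line, is the primitive.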

The more complex the object, the longer it takes us to accurately reconstruct it from memory, and if the object is significantly complex, most of us will not be able to accurately reconstruct it at all.

This is because 3D is orders of magnitude more complicated than 2D. We have an optical system that processes 2D images very efficiently. The retina can receive an entire 2D image and place it directly on our brain, but we have no such system for 3D.

We have depth perception of one side of an object, but there is no such thing as human 3D vision. Without using technology, for example, we cannot see the frontside, backside, and inside of our moon simultaneously. Our brains help us out with this though, by constructing a mental image from pictures from orbiting spacecraft that we've seen, but this is not really "seeing".

You are seeing a ghost of something in the past. The 2D face of the moon as viewed directly from earth is a ghost, buffered by spacetime, being 1.3 seconds in the past. Analogously, the backside and inside of 3D objects are also ghosts. They cannot be "seen" at the same time as the front; we can only bring them together by employing our memory of the past, those other 2D faces.

Theoretically, if the holographic principle is correct, the Universe seems to allow all 3D information to be mapped into 2D space, which is strange considering that 3D space would have the capacity of storing more information than 2D would allow.

So Superman could really have 3D vision. But even so, he would have to use more than just X-rays to see in 3D. He would have to have the ability to see reflective surfaces at different layers (but X-rays would pass through some layers and be blocked by others), and he would have to have photo-receptors so fast that they could record each layer of reflections (layers of atoms) as they were being scanned, and that is a lot of atoms.

For example, we theorize that the two most abundant elements on the earth (and the moon) are oxygen followed by silicon. If we combine 2 oxygen atoms with 1 silicon atom, we get silicon dioxide, SiO2, or quartz. Superman's fortress of solitude was made out of the most abundant materials of the earth. And if quartz is crushed into particles, we get sand.

Poetically, one could say the Earth is a giant ball of sand, or a quartz gem, or a glass sphere. But, like looking at a child's sandbox, we are only seeing the top layer, the layer that reflects light. If those SiO2 molecules are the idealized building blocks of earth, like the 3-dimensional voxels in the videogame Minecraft, let's calculate their quantity.

Since we know that the radius of the earth is about 6400 kilometers, the atomic mass of Silicon is about 28.0855 grams/mole, the atomic mass of Oxygen is about 15.9994 grams/mole, and the density of SiO2 is about 2.634 grams/cubic centimeter, we can calculate the volume-to-surface-area ratio of the finest sand that would fit inside the earth.

First, let's find the molecular mass of SiO2. One silicon atom + 2 oxygen atoms = molecular mass of 28.0855 + 2(15.9994) = 60.0843 grams/mole.

Since a mole of molecules is equal to the Avogadro constant ($6.0221 \times 10^{23}$), we can now find the number of molecules in one gram of SiO2:

$6.0221 \times 10^{23}$ molecules / 60.0843 grams = $1.002 \times 10^{22}$ molecules/gram

Since we know approximately how many grams of SiO2 fit into a cubic centimeter (the density), we can now find out how many individual molecules fit into a cubic centimeter:

$1.002 \times 10^{22}$ molecules/gram × 2.634 grams/cubic centimeter = $2.640 \times 10^{22}$ molecules/cubic centimeter.

Now if we take the cube root of this, we can find the number of molecules that fit across a 1-dimensional length of 1 centimeter.

This is approximately $2.97762 \times 10^{7}$ molecules/centimeter.

Then we multiply this by 100,000 to get the number of molecules per kilometer, and finally multiply it by the earth's radius of approximately 6400 kilometers to find the approximate number of SiO2 molecules that would fit along the earth's radius.

This is $1.9056768 \times 10^{16}$ molecules.

Now we take the equation for the volume of a sphere ($\frac{4}{3} \pi r^3$) and divide it by the equation for the surface area of a sphere ($4 \pi r^2$), using the number of molecules for the radius. The ratio simplifies to $r/3$, and it shows the number of times more molecules in the volume than in the surface area.

This is approximately 6,352,256,000,000,000.

What does this mean?

We live in an age of space-based geographic imaging, yet visible light only shows us the surface atoms of the earth. If we were to peel away the layers like a CT scanner, an X-ray that revolves around an axis, we would see around 6 quadrillion times more information than on the surface.

Is this information important? We don't know, since, after millions of years of evolution, we have only observed 1 out of 6 quadrillion different "earths". We do know that much of the ancient history of life (paleontology) and civilization (archeology) and the earth (geology) has been found there.
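As a sanity check, the chain of arithmetic above can be replayed in a few lines of Python. This is only a sketch using the same rounded constants as the text; the variable names are illustrative, and the last few digits differ slightly from hand-rounded intermediate values. The ratio of a sphere's volume to its surface area simplifies to r/3, so under these assumptions it comes out to roughly 6.35 × 10^15.

```python
import math

AVOGADRO = 6.0221e23        # molecules per mole (rounded as in the text)
SI_MASS = 28.0855           # grams per mole
O_MASS = 15.9994            # grams per mole
SIO2_DENSITY = 2.634        # grams per cubic centimeter
EARTH_RADIUS_KM = 6400      # approximate
CM_PER_KM = 100_000

molar_mass = SI_MASS + 2 * O_MASS                # about 60.0843 g/mol
per_gram = AVOGADRO / molar_mass                 # molecules per gram
per_cm3 = per_gram * SIO2_DENSITY                # molecules per cubic centimeter
per_cm = per_cm3 ** (1 / 3)                      # molecules along 1 centimeter
radius = per_cm * CM_PER_KM * EARTH_RADIUS_KM    # molecules along the radius

volume = 4 / 3 * math.pi * radius ** 3           # molecules in the sphere
surface = 4 * math.pi * radius ** 2              # molecules on the surface
ratio = volume / surface                         # simplifies to radius / 3

print(f"{molar_mass:.4f} g/mol")
print(f"{per_cm:.4e} molecules/cm")
print(f"{radius:.4e} molecules along the radius")
print(f"{ratio:.3e} volume-to-surface ratio")
```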

Of course, this is just an idealized version of the earth. The real earth is even more interesting and contains more than just sand; the real earth is influenced by gravitational pressure, heat, motion, and energy, changing the nature of the matter inside it.

But you get the general idea. The point is that 3D is massively complicated for us. As surface dwellers, we are oblivious to the layers of earth beneath us. How can one not be fascinated by a subterranean DungeonCrawl adventure?

And it is also massively complicated for computers, so, ironically, we took some of that sand and separated out some pure silicon atoms and used them to build integrated circuits for 3D GPUs (Graphics Processing Units).

At their pinnacle in the 1990s, the most advanced 2D cards were called "Windows Accelerator Cards", since Microsoft Windows was the dominant platform at that time. The first 3D videocards also appeared during that time and were expensive, and by the year 2000, when the price had dropped, I purchased my first one, an Nvidia Riva TNT2, which I later used to play my first 3D MMORPG, Dark Age of Camelot. My second one was an Nvidia GeForce 2 MX 200 which I used to play World of Warcraft. I later relied on the Intel Core i3-550 integrated graphics until I upgraded to an Nvidia GTX 750 Ti which I used to play Wurm Unlimited and also experimented with GPGPU programming using OpenCL. But the 3D MMORPG was the only reason that I purchased new cards.

For decades, 3D videocards have continued to advance, but 2D was computationally "solved" by the 1990s. Again, this is because we have not yet been able to build a card that can process all of the complexities of 3D in real-time. And even when we reach the state of realistic illusion, like 24-bit color did for 2D cards back in the 1990s, we won't be able to capture all physical law. Today, GPUs are incorporating hardware physics processing to try to do some of this.

Each generation of cards gets a little bit more powerful. But since our world is 3D, we cannot represent the entire world within a device that resides within our world. The silicon atoms used in the CPU are no more special than the silicon atoms that make up the earth. Our earth, being of the natural world, is already "optimally compressed" by this world.

The only ways to represent this world inside a tiny device within this world would be to either augment the real world with artificial elements (which many people are trying to do with smartphones and head-mounted displays) or somehow further compress our world by finding abstract "rules" from which to generate it, and then generate tiny windows into this artificial world.

We don't know if such rules exist, but if the world is fractal, then this may one day be possible. Maybe.

But until then, for the same reason that our most powerful satellites and supercomputers still cannot make accurate long-range weather forecasts, our most realistic procedurally-generated worlds will only be a crude approximation of some of those rules.

We can also use those rules to assemble polymer molecules out of information to create new physical objects.

The philosophical idea of an "object" is something that I write about frequently. It is a mysterious thing, shrouding our concept of information and crossing that enigmatic gap between the singular and the collective, the part and the whole.

The act of creating a physical Object and naming that collection of quarks, atoms, molecules, or mechanical parts as a single entity, a unit, is our everyday form of magic. Naming is magical, akin to an act of creation, for names abstract collections into singular ideas. There is great power in the Word.

I am already fascinated with robotics, language, and dimension, so it is no wonder that such ideas have compelled me to read about 3D printers for years. It is a device that can be imagined to take a 1-dimensional line of plastic filament and turn it into a 3-dimensional plastic object, excluding, of course, the dimension of Time.

Essentially, we are giving the Word to a robot, which, like a Christmas elf, gives us an Object, a peculiar transaction of magical gifts, at least magical in our material world.

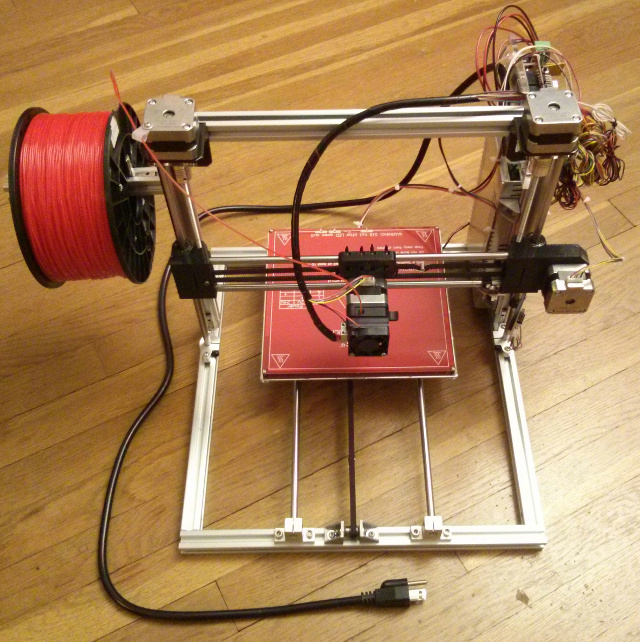

In December 2015, I built my first Cartesian 3D printer. I asked for a kit for Christmas, that magical day of gift exchange, specifically an open design from the RepRap project based on the Prusa i3. I chose the inexpensive 2020 Prusa i3 (Rev. A) from Folger Technologies out of New Hampshire, a design slightly modified to include an all-aluminum frame.

After I opened the kit, I unpacked and sorted the parts, mainly consisting of metal beams/rods, bolts and bearings, belts, stepper motors, extruder, heated bed, wires, power supply, and control electronics. Many of the connecting parts were also 3D printed plastic parts, one of the benefits of the self-replicating RepRap designs.

It included an Arduino Mega 2560, which was my first experience with this board, based on the ATmega2560 AVR microcontroller. I had previously programmed a different AVR microcontroller (the ATtiny1634) for another project, but had never used an actual Arduino board until then. It also included a RAMPS 1.4 Arduino shield, a RepRap control board designed to allow Pololu stepper motor driver boards to easily interface into the Arduino. My kit came with several A4988 driver boards.

It took me about 2 days to build, 2 days to configure the software, and about 2 days to get it properly calibrated. I run my host software on a RaspberryPi 2 (ARM processor) using Arch Linux, so I had to do a lot of compiling/configuring and solve some dependency and compile issues. I also had to make a few modifications to the printer hardware, such as epoxying the Z-axis carriage bearings in place to prevent them from falling out. And I had to adjust the Z-axis stepper motor current to keep it from stalling. This was also my first experience with 3D slicing engines, so I had some technical reading to catch up on.

Here is the completed printer:

My first test print ever was the red #3DBenchy boat (shown at the top of this page). It was printed in PLA plastic using the Marlin firmware and a Raspberry Pi 2 running Repetier-Host and Slic3r. I used an extruder temp of 219 °C with no cooling fan on the part itself, and set the Z-axis resolution to 0.4 mm (but I print at 0.3 mm today).

I have used the 3D printer to create the enclosure and mechanical parts for my TrillSat craft, and have also been attempting to create physical procedurally-generated objects for use in my physical roguelike game.

For my TrillSat enclosure, which had to be weatherproof, I switched from PLA to PETG, a more finicky plastic to print, but more flexible and less toxic than the popular alternative ABS, as it is similar to the plastic used in water bottles. I also switched from Repetier-Host to the command-line console Pronsole, part of the open-source Printrun software suite, as it put less of a burden on the RaspberryPi.

I also taught myself OpenSCAD's modeling language. It primarily uses parametric constructive solid geometry, essentially manipulating and combining 3D geometric primitives from simple rules. Being parametric, it allows me to not only generate an object from a small set of rules, but programmatically change just a few parameters to alter the entire shape, allowing procedural generation. And OpenSCAD and Slic3r can be chained together in a Unix processing pipeline, producing files that can be easily loaded by Pronsole.

Many people design objects for 3D printers as static things using a WYSIWYG editor, then print them. But if you want to have the printer print several different things at once or print several different variations of one thing from a single algorithm, then it has to be based on variables.
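To make the idea of variable-driven variations concrete, here is a minimal sketch in Python that emits OpenSCAD source for a stylized house: a box with a triangular-prism roof. It is an assumption-laden illustration rather than a description of any actual model: `house_scad`, its parameters, and the file names are hypothetical, and only basic OpenSCAD operations (`cube`, `translate`, `rotate`, `linear_extrude`, `polygon`, `union`) appear in the generated text.

```python
def house_scad(width, depth, wall_height, roof_height):
    """Return OpenSCAD source for a box with a triangular-prism roof.

    Hypothetical helper: the shape and parameter names are illustrative only.
    """
    return "\n".join([
        "union() {",
        f"  cube([{width}, {depth}, {wall_height}]);",
        # The roof profile is drawn in 2D, extruded, then stood upright on the walls.
        f"  translate([0, {depth}, {wall_height}])",
        "    rotate([90, 0, 0])",
        f"      linear_extrude(height = {depth})",
        f"        polygon([[0, 0], [{width}, 0], [{width / 2}, {roof_height}]]);",
        "}",
    ])


# Several variations of one object, all from the same small rule set.
variants = {
    f"house_{w}x{d}.scad": house_scad(w, d, wall_height=10, roof_height=w / 2)
    for w, d in [(20, 30), (30, 30), (40, 25)]
}

for filename, source in variants.items():
    print(f"// {filename}")
    print(source)
```

Changing one pair of numbers in the list produces a different house, and each generated string could be saved to a `.scad` file and passed on to the rendering and slicing steps of a pipeline.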

OpenSCAD is not a real-time graphical editor; it is a generator, and if you've read other essays on this site, you'll know that I love generators. In the past, I built a static site generator and a roguelike world generator. Generators transform an object between the Time domain and Space. And once you do that, temporal hardware limitations (a.k.a. the CPU) or spatial limitations (a.k.a. memory) are not an issue. Overcoming these technical problems leads to highly philosophical insights that go beyond technical contexts.

In language and creative contexts, I've previously referred to this as the connotation/denotation relationship. Synthetic worlds can be generated from chaotic fractals, but even those fractals, formed from dynamical systems, are generated by applying a simple, fixed rule to an iterated function or cycle.
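One familiar instance of a simple, fixed rule applied to an iterated function is the Mandelbrot set, where the rule is z → z² + c. The choice of this particular fractal is mine, made only for illustration, and the function names, grid size, and iteration limit below are arbitrary assumptions.

```python
def escape_time(c, max_iter=50):
    """Iterate z -> z*z + c from z = 0; return the step at which |z| exceeds 2."""
    z = 0j
    for step in range(max_iter):
        if abs(z) > 2:
            return step
        z = z * z + c
    return max_iter  # never escaped within the limit


def render(width=60, height=20, max_iter=50):
    """Return an ASCII picture: '#' where the point did not escape, '.' elsewhere."""
    rows = []
    for j in range(height):
        y = -1.2 + 2.4 * j / (height - 1)
        row = ""
        for i in range(width):
            x = -2.2 + 3.2 * i / (width - 1)
            inside = escape_time(complex(x, y), max_iter) == max_iter
            row += "#" if inside else "."
        rows.append(row)
    return "\n".join(rows)


picture = render()
print(picture)
```

The whole picture comes from the single line `z = z * z + c`; everything else is bookkeeping for where to sample and how to display the result.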

The 3D printer project was a Fun, Fascinating, and Fantastic way of expanding my knowledge of Fused Filament Fabrication (FFF). The 3D printer, being additive, can create shapes impossible for computerized milling machines and goes beyond manufacturing, crossing more easily into the imaginative realms, somewhat like the differences between stone and clay sculpting.

But it is more than that. Watching a 3D printer extrude colorful plastic to gradually build up a shape layer after layer is entrancing. You can see the G-code reveal itself; the change in speed, the arcs, the choice of how to fill the space, and the vibrations of the stepper motors have a singsong quality, more than just mathematical in character. These things are being sung into existence, as the Word becomes the Object.
