↑ MorseDecoded

Page Created: 2/1/2017 Last Modified: 3/14/2021 Last Generated: 2/12/2026

SIMTHEO, A Simple Hand-Sent Morse Decoding Algorithm for Machine Recognition of Discrete Signals

by Lee Djavaherian, KD0YJM

Introduction

In 2016, I decided to create a new decoding algorithm for hand-sent International Morse Code to replace the original decoder that I created for my roguelike game on an 8-bit microcontroller, but the algorithm worked so well that I decided to publish it in case it may help fellow AmateurRadio enthusiasts or makers. I had also obtained my General class license that year, which got me thinking more about HF↗ where CW↗ is more common. Previously, I had been working with more complex packets over VHF and am currently working on a new system called TrillSat based around this old technology, and was able to invert the Morse algorithm to create a useful haptic backup communication channel for TrillSat if all of its other, more complex subsystems, failed. It allows tether thumps to send wave pulses to an accelerometer.

In 2007, the FCC removed the Morse code literacy requirement for amateur radio operators, so I didn't have to take any code tests to pass my exams, but that didn't diminish my interest. Since Morse code had its beginnings in the 1830's with the electromechanical wired telegraph, it would not surprise me if someone had already invented such an algorithm over the following two centuries, and that I just "re-invented" it. I simply designed it around the limitations of a tiny digital microcontroller, keeping the computational burden low and using as little memory as possible, but this could have been performed in some manner in early analog "printing telegraph" (teleprinter) circuits, as the most significant calculations are simple size comparisons or ratios to find the absolute or relative timing lengths of discrete pulses within a signal, not unlike the electromechanical switching done by early telephone exchanges for rotary "pulse dial" or switch-hook dialed telephones. If you want to get right to it, click here to see the algorithm, otherwise keep reading and I'll ferry your boat into a more scenic tributary...

The Design

In my game I explored several different bases for numeral systems and for months was as close as humanly possible to the singular "bit", the atom of information that cannot be separated, but only hidden or combined. At this level it becomes apparent that all of our higher representations are arbitrary collections of these discrete atoms, systems designed for and only useful to a collective. What we know of as higher mathematics, statistics, and language are relics of a collective world, and do not and cannot exist here. Calculus and statistics, for example, decompose to simple byte arithmetic. All qualitative things decompose to quantitative, a reductio ad absurdum that will even decompose itself, a paradox, turning our lowest and most precious tool, logic, into a wispy phantom; dividing it down to the Boolean operations, but even this will mysteriously dissolve if we go far enough, becoming neither qualitative nor quantitative, but purely subjective↗. The Turing machine, the abstract model of today's digital computer, is a tape + head, a duality. Remove either and it crumbles, slips through our fingers, and falls out of view.

For us physical beings, information must have a physical representation, and for various reasons, I chose a numeral system called "base 26", whose digits can be easily represented by the 26 letters in the English alphabet. This also works well with a subset of International Morse Code with A-Z being represented by a sequence of up to 4 "dits" or "dahs", onomatopoetic words that audibly mimic the visual "dot" and "dash", as dah intentionally takes longer to pronounce than dit, not unlike the elongated tones used in tonal languages like Cantonese. My own experimentation showed me that our auditory recognition of Morse code is better than visual, which is common knowledge for the many hams that are fluent in this ancient digital protocol.

But in 2016, Georgia Tech researchers discovered that touch, or haptic, recognition is even better↗. Again, it is likely that these discoveries are just re-discoveries of what early telegraph inventors learned while using those large, vibrating solenoids, and I took advantage of this in my TrillSat THUMP haptic system.

Morse code is quite fractal and creates a well-formed Huffman tree↗, because it was designed via optimization to be efficient (early digital compression) and because letters tend to follow a power-law (fractal) distribution known as Zipf's law. Interestingly, Zipf's law also holds for words, and it doesn't even matter what language is represented. Even dolphin communication follows Zip's law, and I have postulated for some time now that knowledge itself is scale-free (fractal).

Morse is a restricted case of ternary (base 3) code since its varying length, which can be thought of as null values, also have meaning. Even though only two elements are transmitted, the dit and dah, it is not pure binary due to this differing length, but can be converted to it through padding, which I implemented in my game. It requires 5 bits to store at least 26 letters in binary even though a Morse letter maxes out at 4 elements (not counting the null spaces). Theses elements, or symbols, aren't actually bits in this case, so keying speed, or baud rate (symbols per second), is easily confused with bps (bits per second).

My first Morse decoder was a crude attempt. I simply timed my keypresses and compared their duration to default values for a dit and dah, and if they were close to the default, it considered this a recognition. This forced me to be very mechanical in my timing, and I still made a lot of errors. So, to assist me, I had the computer echo the code back to me using its timing as a model, trying to train the user (myself) in the process. It wasn't natural or reliable, so I hastily concluded that such decoding would be too difficult and complex to incorporate on such a small device and that I would need to create an electronic keyer using two separate circuits to allow positive confirmation on the dits and dahs, which would force me to key in two different directions. Even though this was ergonomically superior (function), it bothered me, as it seemed to lack the mechanical simplicity (form) of the "straight key" used in early films.

I had read a few technical articles on Morse decoding over the years, and most of them incorporate complex mathematical analog signal analysis, such as the Fourier transformation, in addition to using context for word and sentence construction, including statistics and machine learning. This is because Morse code is typically a string of letters sent via CW, a continuous, radio-frequency sine wave, turned on and off, and so those knowledgeable in stochastic signal analysis↗ tend to see it from this perspective. But I am a systems-thinker and use deterministic/discrete methods as my tool of choice, and it looked to me like most of this was overkill for my restricted case.

Like the SETI project, an intelligent signal rides that fine line between meaning and noise. Decoding Morse is actually a primitive case of pattern-recognition and is probably close to the most primitive human pattern that can be recognized, a 1-dimensional, almost binary, pattern. And once you get into pattern recognition, you cross over into the (often opposing) fields of machine learning and statistics, not to mention metaphysics and ontology...

Statistics (the frequentist inference) can compare the frequency distribution with randomness to determine the "likeliness" that the element is a dit or a dah, but statistics needs a context much larger than available for it to be useful, which is why sample size is so important. Statistics has a subtle connection to machine learning (especially Bayesian inference) and looks for smaller patterns in larger data sets (analytical/separation). Machine learning works in the reverse--it creates larger patterns from smaller data sets (generative/combination).

This is a highly philosophical dichotomy, which hits at the core of reality, which I explore in many of my essays. Either way, our observations are not purely objective but require our involvement↗. What does it mean to understand something? Is description, the denotation, the diegesis adequate, or does the answer lie in the realization, the connotation, the mimesis? Like dit and dah, there is a mysterious duality to the Universe, with us at the center. I am becoming more certain over the years that the metaphorical path out of this duality cage is the shifting of context, but such shifting seems to require a map, or degree of freedom, that is not decipherable from within the cage, as the message, information, or signal never reaches its occupants↗.

In the case of my game, I don't have an RF signal at all, just a discrete on/off logical switch like the early days of wired telegraph. Similarly, in the haptic THUMP system, the accelerometer just creates a logic low/high (for a duration that can be configured) via its interrupt line. I don't need to find or filter a signal, but just observe it, since I know positively when a person is holding down the key. There is some rapid on-off-on transience when the physical key is very close to the electrical contact, but this only occurs when the key is changing states; this doesn't affect the logic, it just needs a simple de-bouncing routine.

So, since I know about a much larger external context (how fast people respond, which patterns are valid letters, etc), all I have to do is to generate a simple "lens" or filter to bring the pattern into focus. I just move the computational burden over to myself and then simply store the filter as a simple algorithm.

This was eerily similar to how I designed the game itself, the procedural generator performed a time-to-space conversion, creating memory patterns rather than a sophisticated algorithm. I generated the map, monsters, and items from a larger generator, outside of the tiny microcontroller, and stored the resulting "world" in the microcontroller. The smaller microcontroller code has no problems processing the game mechanics, but it has no understanding of the higher context that is being passed through it from the larger generator to the player, acting as a lens or focal point. To the microcontroller, a Lizard is an L, but to the player, a Lizard is a reptile with known behavior and properties. This information does not exist within the microcontroller, as it requires additional context. It is trapped in that metaphorical cage.

SIMTHEO (Simple International Morse THEOry)

SIMTHEO is a simple algorithm for machine recognition of individual, hand-sent letters in International Morse Code where the signals are discrete, such as a wired circuit or other channel where the presence or absence of the signal can be positively confirmed. I chose this acronym since its letters have a special significance in the code itself; they are the only 7 letters that contain homogeneous elements, all dits or all dahs, and are therefore the most difficult letters to discretely decode, as elements are distinguished by their relative, not absolute, durations. I also like that it can represent both Simple International Morse THEOry (this algorithm) and simulation theory↗, something that I have hypothesized in many of my fractal philosophy essays, that we are indeed inside the The Matrix. It is not related to the "Morse Theory" in mathematical topology, as Morse code was invented by Samuel F. B. Morse, a 19th century painter and inventor, not Marston Morse, the 20th century mathematician.

A dit has a duration of 1 unit, a dah is 3 units, the inter-element space is 1 unit, the inter-letter space is 3 units, and the inter-word space is 7 units. All units are relative, and that's really all you need to know besides memorizing the letter assignments. Some people have mapped this relative timing to an absolute speed, or WPM, which uses the word "PARIS" (50 units of time) as the length of a standard word, and some have elongated the inter-letter and inter-word spaces to make the letter patterns easier for people to audibly recognize (called Farnsworth timing).

This particular algorithm is intended to be used with a single, directly-connected SPST switch and the 26 English A-Z letters in mind, and is not intended for words or sentences, although they can be constructed with varying success. I designed it to minimize memory use and complex mathematics so that it can easily be implemented without floating point operations (integer arithmetic only) on tiny microcontrollers. It is primarily a classifier and isn't concerned much with separating a signal from the noise, but with properly interpreting that signal.

We know that International Morse Code letters A-Z contain at most 4 elements in sequence (dit and/or dah), so we can create a loop to automatically begin decoding after the 4th element is received, otherwise we must wait for a long off pause to occur if the letter has 1, 2, or 3 elements.

However, there are 30 possible combinations, but since there are only 26 letters, there are 4 combinations that are not letters and are either treated as invalid or assigned a special meaning.

Store the Morse alphabet in an array

- If using C language, it was space-efficient to use an array of size 30, in AVR program space, to store the letters in alphabetical order plus the 4 non-letters, starting with A=0, requiring only 5 bits assigned to each letter, aligned to the right side (the least significant bit). This requires only 18.75 bytes and works well with bit-shifting, since bit fields are difficult to access when using program space. Bit mask operations can be used to extract only those 5 bits, and the remaining 3 bits can be used for other purposes, similarly retrieved using shift/mask operations, saving 11.25 bytes. A binary 1 can represent a dah and 0 a dit, but every sequence needs to be padded, prepended with an extra 1 on the left, to avoid collisions, using up to 5 bits for each letter. For example, N (dah dit) is stored at array position 13 with a value of 00110 in binary (6 in decimal) and A (dit dah) is stored at position 0 with a value of 00101 (5 in decimal). Without the leading 1, the A could be confused with V (dit dit dit dah) 10001 (17 in decimal).

- If you are using a higher-level language or have a lot of memory, then you can just use an array of strings and leave out the padding. For example, an A could be

".-"and V could be"...-". - It is also helpful to store the 4 sequences that aren't letters at all and put them at the end of the array (positions 26-29).

Define some default values

- Define a default duration for a dit, and then set the default dah to 3 x dit.

- Define a dit/dah threshold ratio lower than 1/3. From my experience, somewhere around .25 works well, but making it too low makes it difficult for even a human to tell apart or reliably send, and a series of all dits or all dahs might be mistaken for mixed dits and dahs. This ratio should give some leeway if a person cannot hit the 1/3 ratio exactly, which is defined in the Morse standard.

- Define a very short de-bouncing duration that ignores on-times that are not humanly possible and thus noise (1/10th the duration of a default dit works well for me)

- Define a very long duration that is long enough to be obviously not a dah (4 times the default dah works well). This duration is used to enter a sequence (if it is a long off-pause) or to perform a reset (if it is a long on-pause). The reset clears the past dit/dah memory and resets it to the default values again.

Capture the sequence

- Store the sequence in an integer array (16-bit or larger) by timing the on-times (up to a max of 4), ignoring any on-times that are shorter than the de-bouncing threshold, and ignoring the off-times completely. Conveniently, offs don't have to be de-bounced, even though the state may fluctuate during the transition from on to off, since a logical off can never occur when a switch is engaged (unless the switch is dirty or faulty). So the very first logic off we see after a valid on is a positive confirmation of such.

- The sequence ends when either the 4th element is received or a long pause occurs after the last element.

Separate dits from dahs

- Using a second integer array, perform an insertion sort to arrange them in order of shortest on-time to longest on-time. Insertion sorts are simple to implement and ideal for small lists. This places the dits (if any) to the left side of the array and the dahs (if any) to the right side of the array.

- Determine whether the sequence is a mix of dits and dahs or all dits or dahs. To do this, get the ratio between shortest and longest on-time (simply divide the first element by the last element of the array), and if this value is greater than the threshold ratio, the sequence is a mix of dits and dahs, otherwise the sequence is of the same kind (either all dits or all dahs).

If mixed:

- Loop through each member in the sequence and find the adjacent pair with largest on-time difference between them (a simple subtraction), and then split the list at that point to delineate dits (on the left) from dahs (on the right). This simple difference gap works surprisingly well even though it is an absolute measurement and not a relative one, like a ratio. This is because, in typical usage, human error in timing is approximately the same between the dits and dahs, not relative to the size of the element. This simplifies the integer arithmetic, and if there is a case where a ratio works better than a difference, then it is usually indeterminate (unknown) for both a human and a computer without any additional context.

- Now there is enough information to go back and positively mark the dits and dahs in the original array.

- From that delineated sequence, save the value of a "typical" dit or dah for future reference for when a "same" sequence is found. If there are two dits or dahs, this value is simply the average. If there are 3 dits or dahs, this value is the median, the middle value. The median is a good way to find a typical value, as outliers↗ are ignored.

If same:

- Determine if they are all dits or all dahs by comparing the typical on-time with the old dit and dahs that were remembered from last mixed letter. If there are 2 elements, use the average for comparison, if there are 3, use the median. If there are 4 elements, use the average of the middle 2 (the average of the median pair).

- Determine if this value is closer in distance to to the last recorded dit (a simple subtraction), and if so, then all are dits, otherwise if closer to the last recorded dah, all are dahs.

Decode the sequence

- Loop through the Morse alphabet array that was stored earlier and find the sequence of elements that matches.

- If the sequence is dah-dah-dah-dah (not a letter) then automatically convert to dit-dit-dit-dit (H), but if the sequence is one of the other 3 possible combinations which are not letters and have mixed elements (dah-dah-dah-dit, dit-dah-dit-dah, or dit-dit-dah-dah), then either beep an error alert to the user or assign a special meaning to those combinations such as entering a training mode.

- If the sequence is a letter, add 65 (decimal) to its position in the array to find the actual letter, since this will match the ASCII character (since ASCII letters are also in alphabetical order).

Two-way feedback for training & error correction (optional)

- Feedback to User: Play back the sequence using audio, matching the user's dit durations, but use a dah speed of 3 x dit to maintain a perfect 1:3 ratio, ignoring the dah that was remembered from the last mixed sequence. This allows the user to hear the perfect Morse proportion at their natural keying speed so they don't veer too far from the standard.

- Feedback to Decoder: If the user finds that this sequence is incorrect, the key is held down until it resets (a long duration), setting the dit and dah duration back to the defaults, and then the user can re-enter.

The Inverted SIMTHEO SHH

In 2018, I decided to add SIMTHEO to TrillSat, my tethered, robotic radio platform for haptic communication over the tether. Haptic wave pulses through the tether could be received by the onboard accelerometer, and the craft could send a haptic response by vibrating its eccentric-mass drive motor.

However, only a single interrupt line on an LIS3DH accelerometer was available to me, and its single-click detection was designed for short acceleration spikes. Creating an acceleration duration 3 times longer (to signify a DAH) would require massive thrashing of the tether, so short pulses were the only option. But the Morse code standard is designed around pulse length. So I decided to invert the SIMTHEO algorithm to time the pauses instead of the pulse lengths--this works, but the downside is that you can no longer delineate that last element, as you don't know when that pause was supposed to end. So I made the assumption that all elements end with DIT by default unless another pulse was received a long time later (longer than a DAH), but not too long (which signifies end of input).

This works well, since only 12 letters end with DAH, but 14 end with DIT. And of those 12, 5 contain 4 elements and end in DAH (and therefore a 5th pulse immediately signifies a DAH, without waiting for that long pause). This leaves only 7 letters (MOWTAUK) that require this additional, carefully-timed pulse.

It's not strictly Morse code, but it allows the Morse alphabet to be used in less than ideal conditions and minimizes the number of pulses, which are more difficult to enter by plucking or flicking the tether. In the case of SIMTHEO SHH, I named the 4 non-letter sequences F1, F2, F3, F4 and in the THUMP system, I decided to use F1 and F2 as control codes for user input confirmation.

SIMTHEO SHH replays Morse using standard Morse (SIMTHEO) instead of inverted Morse for better human recognition. A computer-controlled motor can generate the necessary continuous wave vibrations, which aid human recognition.

A Proof of Concept Decoder

Program Description

SIMTHEO Decoder

http://greatfractal.com/MorseDecoded.html(Please note that this page was originally generated in HTML. If you are reading this as a text README file inside the source tarball, it will not contain any hyperlinks and the program lines may be truncated.)

Warning: This software is highly experimental! I created it for myself to test my TRILLSAT-1 prototype (see http://greatfractal.com/TrillSat.html for more information); it is probably full of bugs and not recommended for reliable, dependable use without significant changes and improvements--it may not follow best programming practices, either.

Also note that I have created this system for my own use and self-education only, with the hope that providing information about the project will be useful to others in some way. It might not be suitable for you, and I am not responsible for the correctness of the information and do not warrant it in any way.

The SIMTHEO Decoder is a basic C library implementation of the SIMTHEO Morse Code decoding algorithm specifically designed for the ATtiny 1634 microcontroller, but has since been ported to the Raspberry Pi 1, 2, and 3. It was originally designed for use with my A Tiny Room in a Tiny World roguelike game and THUMP (Tethered Haptics Using Morse Pulses) backup communication subsystem for my TrillSat robotic radio platform, but can be adapted to other experimental projects with the caveats mentioned above.

It simply takes a key input (usually a single microcontroller pin that is pulled to a logic 0/ground, but this can be defined) and echoes back the output (a single microcontroller pin that controls an LED, piezo element, or even multiple output pins to control the H-Bridge vibration of a haptic motor, for example). Once all elements are received, it replays the letter in Morse code at the keying speed of the user, but corrected for perfect proportion, for feedback confirmation.

It is a discrete, single letter classifier for only the 26-letter subset of International Morse Code (plus 4 non-Morse control codes) and does not contain additional input validation or line buffering, which are left to the external program to handle.

To save RAM, it stores the Morse alphabet and 4 control codes using only the last 5 bits of a 30-character array in flash memory program space (18.75 bytes).

It has two primary modes of operation: SIMTHEO, which is standard, continuous-wave style Morse Code, and SIMTHEO SHH, an inverted style of Morse Code which recognizes the pauses instead of the tonal lengths, using timing and/or final pulse delimiter to identify the last element.

Static and Dynamic Libraries

When I first published the source code to this project (version 1.0 and 1.1) under LGPL version 3.0, I intended to eventually modularize the code to allow it to compile as a dynamic library (an .so shared object file), the primary reason due to the LGPL itself--so that I could give more flexibility to the user, as the dynamic library already existing on a computer has no bearing on the project that uses it. From my understanding, without dynamic-linked libraries, the LGPL library user has to ensure (if they decide to release their code to others) that the library can be modified without breaking the program↗, a more difficult task which usually requires the providing of the object code at minimum.

At version 1.2, I then modularized my code enough to allow this to work. However for the ATtiny 1634 code, I was surprised that I could not find any information on how to do this. Dynamic libraries seem to be a function of an OS (which doesn't exist on an ATtiny 1634). The OS tools have to set up these connections dynamically. So my only option for the ATtiny was to release it as a static library, libsimtheo.a which has to be linked at compile time. For me, as copyright holder, I don't have to worry about it, but unfortunately, for you, under the LGPL 3.0, if you want to use my code in an ATtiny 1634 project that you decide to give away to others, you have to at least make available to them a binary object file of your code that can be linked to libsimtheo.a, so libsimtheo.a can then be modified without breaking the program. At least that's my interpretation of the LGPL 3.0.

But, obviously, in many situations this is overkill and not ideal for distribution of tiny 8-bit chips like an ATtiny 1634, which might not be connected to anything and has no Ethernet or wireless network abilities. Please be aware of this caveat.

But for the Raspberry Pi 1, 2, and 3, though, I was able to generate both static and dynamic libraries, which means that the libraries can be modified without requiring the code that connects to it, allowing more flexibility to the user.

I created a very basic makefile that compiles the following when "make" is run from the same directory:

The static libraries:

- libsimtheo-ATtiny1634.a

- libsimtheo-ARMv6h.a

- libsimtheo-ARMv7h.a

- libsimtheo-ARMv8.a

The dynamic libraries:

- libsimtheo-ARMv6h.so

- libsimtheo-ARMv7h.so

- libsimtheo-ARMv8.so

When using dynamic libraries, simlinks should be created. For example, for ARMv8 (64-bit Raspberry Pi 3) version 1.2:

ln -s libsimtheo-ARMv8.so.1.2 libsimtheo.so.1

ln -s libsimtheo.so.1 libsimtheo.so

Then the OS has to be able to see the new library. On Arch Linux, for example, I put my path in a file called simtheo.conf and then place this file in /etc/lib.so.conf.d and run ldconfig -v, but there are a variety of ways to do this.

Then one could use the simtheo functions and global variables (say, in a myfile.c program), by including the libsimtheo.h file and then compile it and reference the library:

gcc -mcpu=cortex-a53 -mfpu=neon-fp-armv8 -funsafe-math-optimizations -mfloat-abi=hard -std=c11 myfile.c -o myfile.o -lwiringpi -L. -lsimtheo

Then myfile.o, when executed, will connect to the dynamic library on startup.

On my system, when compiling, it first chooses the dynamic library, if found, instead of the static one unless otherwise indicated. Note above that wiringpi.so also has to be added to the parameters to allow the Pi to mimic the GPIO functions of the ATtiny. At the time of this writing, WiringPi was also licensed under LGPL V3.0 by its author.

A similar process is used for the Pi 1 and 2, but the gcc architecture parameters need to be changed.

But for the ATtiny, the only option is to statically compile:

avr-gcc -std=c11 -mmcu=attiny1634 -Os myfile.c -o myfile.o -L. -lsimtheo-ATtiny1634

Please keep in mind that this is the first C library I've ever created, primarily for my own use, and it is probably full of bugs, may not follow conventions, and may break when I release new versions. It is experimental only!

Functions

The module provides 5 functions:

uint8_t read_morse (void) - this is the primary input function that reads the keyed input, echoes the individual elements, replays the recognized letter, and returns the letter code (0-29) which can be added to 65 to obtain the ASCII value. In SIMTHEO mode, in the case of letter code 26 (----), which I call F1, it changes it to 7 (....). But in SIMTHEO SHH mode, F1 is simply returned as letter code 26, for situations where the external program needs to use it as a control code. And in standard SIMTHEO mode, 31 is used as a hold function, and 32 as a reset function, but these don't apply to SIMTHEO SHH since it doesn't recognize the pulse lengths. In both SIMTHEO and SIMTHEO SHH, the value of 30 is an error code.

void letter_to_morse (uint8_t lettercode) - this is the primary output function that takes a letter code and outputs a letter in Morse code.

uint8_t morse_to_letter (uint8_t pulses) - this function takes an 8-bit integer which stores the raw Morse element sequence (binary with padding), and returns its letter code value. This saves many times more RAM than using a char string to store the elements (Memory is precious on the ATtiny 1634, having only 1024 bytes of data SRAM). The value of 30 is considered an error code.

void morse_vibrate (uint16_t length, uint8_t delay) - this function takes a length as a 16-bit integer to use as a duration in 128 microsecond increments (maxing out at around 8 seconds), and takes an 8-bit integer to use as a period for an oscillation or vibration in millisecond increments. The delay is configured by a VIBRATE_DELAY define value and changes the frequency of the oscillation, which is used for sound (piezo elements) or haptic (motor pulses).

void insertion_sort(uint16_t A[], uint8_t positionA[]) - this function takes two 4-element arrays, main and position, and performs an insertion sort of the main array, but it retains memory of the original position of the values in the 8-bit array. The main array is 16-bit, since it stores time values, but the position array is 8-bit. It is used by the read_morse function to sort dits from dahs.

Requirements

When compiling, pre-processor directives look for the __AVR_ATtiny1634__ macro which is set by the -mmcu=attiny1634 parameter in avr-gcc. In this mode, it compiles a static library for the ATtiny 1634 only. The ATtiny 1634 requires the the avr-libc library (and also avr-gcc, avr-binutils). It uses the 16-bit timer, assumes that it is running at 8 Mhz (set in fuses and defined by F_CPU 8000000UL), and it sets the timer prescaler to 1024 (if running at 1 Mhz F_CPU 1000000UL, the prescaler can be set to 64 and the T_DIT value in the defines can be multiplied by 2 to compensate...) So it could conflict with other things that might be using the timer with a different prescaler value.

However, if this macro is not set, it assumes that compiling is occurring on a Linux OS (for the Raspberry Pi 1, 2, or 3) which does not require avr-libc but requires libc and WiringPi to emulate the original ATtiny 1634 functions. It then compiles static and dynamic libraries for the ARMv6h, ARMv7h, and ARMv8 architectures.

Defines that need to be customized

To save memory, I use defines a lot. By default, the code makes assumptions about how the morse key and LED and/or piezo element are connected, unless otherwise changed.

For the ATtiny 1634, it assumes that the LED, piezo, or haptic motor is connected to PA6. It assumes that a morse key switch of some sort is connected to PC1.

For the Raspberry Pi, it assumes that the LED, piezo, or haptic motor is connected to BCM GPIO 15. It assumes that a morse key switch of some sort is connected to BCM GPIO 23.

These can be changed by editing these defines in the libsimtheo.h file before compiling via the makefile:

KEY_UP (read key up as true)

KEY_DOWN (read key down as true)

MORSE_ON (turn on morse signal circuit)

MORSE_OFF (turn off morse signal circuit)

The code also assumes a 1 ms delay in how fast the LED, piezo, or haptic motor vibrates when turned on:

VIBRATE_DELAY (0 for no delay, 1-255 for delay in ms)

And the code assumes that it is in normal SIMTHEO mode, not the inverted SIMTHEO SHH mode by default:

SIMTHEO_MODE (0 is SIMTHEO, 1 is the inverted SIMTHEO SHH)

For example, something like this might be added if the key is on PB3 and an LED is on PB2 (assuming the ports are set correctly):

#define KEY_DOWN bit_is_clear(PINB,PB3)

#define MORSE_ON DDRB &= ~(1<<PB2)Parallel Operation

I designed the decoder so it can either be run on a single microcontroller or also run in tandem on multiple microcontrollers (or even a mixed microcontroller/Raspberry Pi chain) at the same time, with the output of one decoder daisy-chained to the input of another. All CPUs echo to the others, but do not replay the signal, and the last in the chain replays the signal but doesn't echo. The echo and replay states, as global uint8_t 8-bit unsigned integers can be turned on and off for each microcontroller:

morse_echo (0 off, 1 on)

morse_replay (0 off, 1 on)

On the ATtiny 1634, morse_echo and morse_replay are initialized to 1, but on the Raspberry Pi they are initialized to 0. These can simply be changed in your calling program. The reason I turned off the output on the Raspberry Pi by default is because I use the Pi to program the ATtiny, and don't want its output lines (which I have connected for programming and accessing it over UART) to interfere with the ATtiny. But, of course, if I want the Raspberry Pi to drive an LED, piezo, or haptic motor directly (or via a special passthrough mode I created), I would set morse_echo and morse_replay to 1.

In my TrillSat haptic system, for example, I run two ATtinys daisy-chained in parallel, with the first morse_echo = 1, morse_replay = 0, and the last in the chain that controls the haptic motor has morse_echo = 0, morse_replay = 1, so there are various cases and the morse_echo and morse_replay global variables can be set accordingly.

Pin Change Interrupt

It's a good idea to allow the external program to use an interrupt for initial key detection, since Morse pulses are short and do not tolerate delays. It's handled differently for the ATtiny and Raspberry Pi.

For the ATtiny 1634, the pin change interrupt and mask (for just that pin) should to be enabled in the calling program for that range of pins that include the keying pin, if it is not already present. This varies depending on pin. For example, for PB3, which uses PCINT11:

GIMSK |= (1<<PCIE1);PCMSK1 |= (1<<PCINT11);

You may want to enable sleep mode to allow the CPU to power down after Morse is received and a command is performed:

set_sleep_mode(SLEEP_MODE_PWR_DOWN);

PCMSK2 |= (1 << PCINT13 );

GIMSK |= (1 << PCIE2);

And then, create an ISR function for PB3, for example:

ISR(PCINT1_vect) {

PCMSK1 &= ~(1<<PCINT10) //disable just the morse key to keep it from calling itself

sei(); //this allows other interrupts (like I2C) to continue to work

uint8_t received_morse_letter;

received_morse_letter = read_morse();

//validate it

//do something

PCMSK1 |= (1<<PCINT11); //now re-enable the morse key again

//now put CPU to sleep

sleep_enable();

sleep_cpu();

sleep_disable();

cli(); // turn off global interrupts

}For me, I had other interrupts that didn't like to be delayed, so I had to use sei() to allow them to work, yet I didn't want PB3 to call itself, so I just turned off that pin temporarily while inside the ISR.

However, on the Raspberry Pi, I had to use WiringPi to emulate the ATtiny's interrupts.

wiringPiSetupGpio();

pinMode (23, INPUT);

wiringPiISR (23, INT_EDGE_FALLING, *Morse_Interrupt_Function)Then I created a function called Morse_Interrupt_Function() to handle the code, similar to the ATtiny's ISR function.

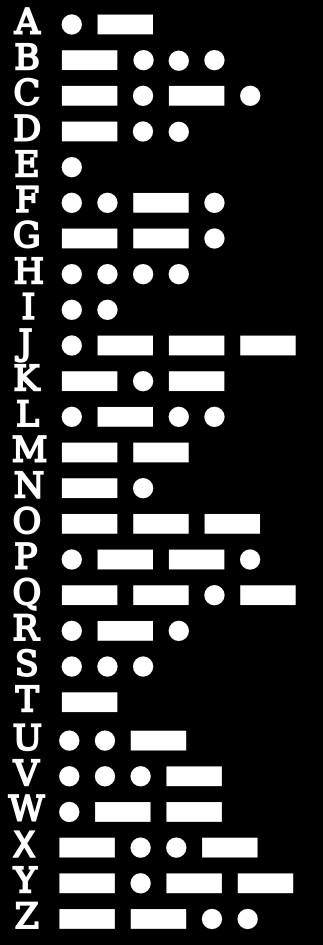

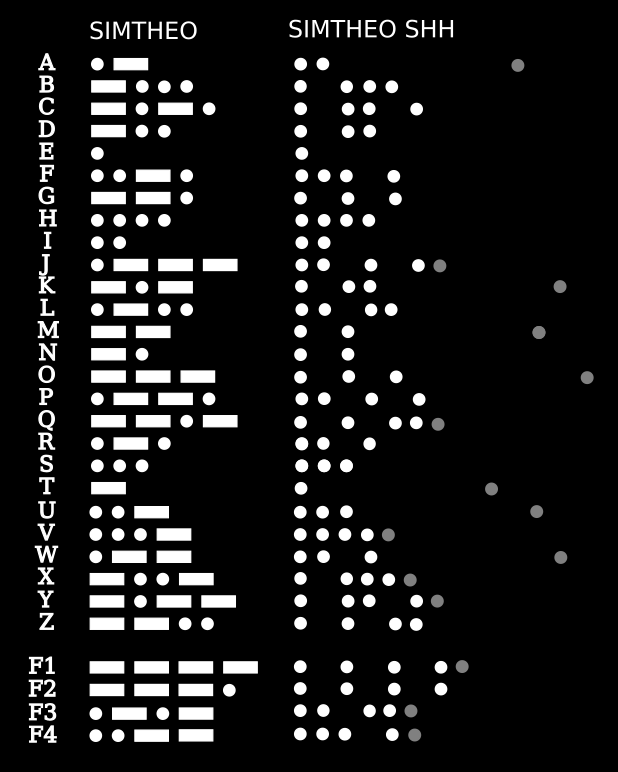

Morse alphabet

Below are the 26 Morse Code letters, and 4 control codes, that are recognized, showing traditional SIMTHEO Morse and the inverted SIMTHEO SHH DIT/pause patterns with occasional terminating pulse for ending DAHs. They are converted to binary using padding, using the 5 lowest bits only, stored in AVR program space. Anything can be stored in the highest 3 bits, since the actual values are read using a mask of 00011111 applied by an AND operation. This frees up around 11 bytes.

SIMTHEO SIMTHEO SHH

000 00101 A 0 .- .. .

000 11000 B 1 -... . . . .

000 11010 C 2 -.-. . . . .

000 01100 D 3 -.. . . .

000 00010 E 4 . .

000 10010 F 5 ..-. ... .

000 01110 G 6 --. . . .

000 10000 H 7 .... ....

000 00100 I 8 .. ..

000 10111 J 9 .--- .. . ..

000 01101 K 10 -.- . .. .

000 10100 L 11 .-.. .. ..

000 00111 M 12 -- . . .

000 00110 N 13 -. . .

000 01111 O 14 --- . . . .

000 10110 P 15 .--. .. . .

000 11101 Q 16 --.- . . ...

000 01010 R 17 .-. .. .

000 01000 S 18 ... ...

000 00011 T 19 - . .

000 01001 U 20 ..- ... .

000 10001 V 21 ...- .....

000 01011 W 22 .-- .. . .

000 11001 X 23 -..- . ....

000 11011 Y 24 -.-- . .. ..

000 11100 Z 25 --.. . . ..

000 11111 F1 26 ---- . . . ..

000 11110 F2 27 ---. . . . .

000 10101 F3 28 .-.- .. ...

000 10011 F4 29 ..-- ... ..

Disclaimer

Warning, this project is experimental and not recommended for real data or production. Do not use this software (and/or schematic, if applicable) unless you read and understand the code/schematic and know what it is doing! It was created by a human (myself) and not AI, and I made it solely for myself and am only releasing the source code in the hope that it gives people insight into the program structure and is useful in some way. It might not be suitable for you, and I am not responsible for the correctness of the information and do not warrant it in any way. Hopefully you will create a much better system and not use this one.

I run this software because it makes my life simpler and gives me philosophical insights into the world. I can tinker with the system when I need to. It probably won't make your life simpler, because it's not a robust, self-contained package. It's an interrelating system, so there are a lot of pieces that have to be running in just the right way or it will crash or error out.

There are all kinds of bugs in it, but I work around them until I later find time to fix them. Sometimes I never fix them but move on to new projects. When I build things for myself, I create structures that are beautiful to me, but I rarely perfect the details. I tend to build proof-of-concept prototypes, and when I prove that they work and are useful to me, I put them into operation to make my life simpler and show me new things about the world.

I purposely choose to not add complexity to the software but keep the complexity openly exposed in the system. I don't like closed, monolithic systems, I like smaller sets of things that inter-operate. Even a Rube Goldberg machine is easy to understand since the complexities are within plain view.

Minimalism in computing is hard to explain; you walk a fine line between not adding enough and adding too much, but there is a "zone", a small window where the human mind has enough grasp of the unique situation it is in to make a difference to human understanding. When I find these zones, I feel I must act on them, which is one of my motivating factors for taking on any personal project.

Here is an analogy: you can sit on a mountaintop and see how the tiny people below build their cities, but never meet them. You can meet the people close-up in their cities, but not see the significance of what they are building. But there is a middle ground where you can sort of see what they are doing and are close enough to them to see the importance of their journey.

The individual mind is a lens, but, like a single telescope looking at the night sky, we can either see stars that are close or stars that are much farther away, but we can't see all stars at the same time. We have to pick our stars.

I like to think of it like this:

It is not within our power to do everything, but it is within our power to do anything.

Source Code

The latest SIMTHEO Decoder source code can be downloaded here and is licensed under LGPL 3.0.

Benefits

- Only a single data line + ground or a single accelerometer interrupt line is needed for full bi-directional communication. If a normally-closed SPST switch is used, a buzzer can easily be wired as half-duplex, using time-division multiplexing for output, since modern microcontrollers can quickly change pin states.

- It allows daisy-chained, asynchronous, parallel operation on multiple CPUs using only a single microcontroller pin for the serial bus, with Morse Code even acting as its own bus protocol, with no reliance on any other protocols. This allows each CPU to process the command differently at almost the same time, allowing orchestrated operations on different subsystems, even if that CPU doesn't know what the others are doing.

- The algorithm is human-friendly and it would be trivial to have the computer randomize sets of letters to implement a training mode, even sending the letters at a higher rate. Although it cannot handle words, it would be somewhat similar to the Farnsworth method.

- There is no need for complex statistics or signal analysis. It can be easily implemented without floating point mathematics (integer operations only).

- It adjusts to the user's speed and can be very fast, allowing the user to hear the pattern at different speeds, which can improve learning.

- The bigger the difference between the dits and the dahs, the easier the detection.

- It learns this difference from the last successful mixed dit/dah combo, which is almost 3 times as likely to occur (since there are 19 mixed and only 7 that are the same if letter frequency is random). This works well for my roguelike game since I use letters discretely in my command table.

- By ignoring the off times, and by eliminating a complex learning/prediction model or data structure, it saves memory.

- Morse code is still useful even in the modern world of complex protocols, high-speed communication, and powerful computers, since it can allow humans to send and receive complex information to/from a computer over a single data line, acting as a failsafe backup channel or manual override if other input/output devices fail.

Drawbacks

- It is only a subset of International Morse Code (A-Z only), so numbers, symbols, and control signals must be spelled out using the English alphabet, analogous to in-band software flow control in modern computing.

- It is a simple algorithm designed for discrete letter recognition, not for words or sentences. For words, letters need to be separated by an off-time of the dah duration, and for sentences, words need to be separated by an off-time of 7 dit durations. This makes it difficult for any simple algorithm, since pauses or off-states between elements can be confused with off-states of letter/word boundaries. The algorithm could be extrapolated, for example, to also time the off times in an array and then separate the inter-element pauses from inter-word pauses in the same way that it separates dits from dahs, but in this case, it wouldn't just be selecting from 30 combinations. In base 26, just 15 letters allows 1,677,259,342,285,725,925,376 combinations, unless limited to valid words, but even using a dictionary still leaves hundreds of thousands of words in the English language. (This is another example of how memory in a higher context is required to bring additional information into existence. To this algorithm, everything must be a letter, but to a human being or a higher-order pattern-recognition algorithm or neural net, we see informational divisions that the lower-order entity cannot.) It has no long-term memory or complex Bayesian-inference-like structure. This can be beneficial at lower abstraction levels, though, as it prevents the system from getting "too smart" and applying an inappropriate bias↗.

- In English, letter frequency is not random, and commonly used words like the, is, it, to, me, he, she, him, his, its, most, this, and those contain no mixed Morse letters are are therefore more dependent on the user remembering the duration of the dits and dahs, which isn't difficult with a little bit of practice and computer feedback.

- It isn't designed to find a signal in the noise (as the noise is non-existent due to its direct connection/discrete logic) so it cannot simply be connected to a continuous analog audio signal such as a radio without an additional pre-processing circuit or algorithm. If this is desired, then there are more sophisticated algorithms that would be more appropriate, as alluded to above.

Insights

- I experimented with automatically changing the threshold ratio to match the user's keying ratio, but this made things worse. There is really no need to change that ratio once set, as it is defined around a fixed standard.

- I tried creating a sliding window that shifted around depending on the length of the user's dits and dahs, but this did not improve recognition. As the dits get larger than the default and the dahs get smaller, they get so close to each other that even a human has a hard time recognizing it.

- I tried allowing a successful detection of a same sequence to also change the default values, instead of only doing this with a mixed sequence, but it just caused a bias effect that grew out of bounds. Resetting this value on each mixed sequence kept things in proportion.

- The training and feedback is optional, since it will improve accuracy if used, but it will also slow down the process by echoing everything back to the user. It allows the user to clear and retrain the system periodically which is useful when one changes their own patterns or speed (or if a different user takes over). I noticed that when I didn't have the auditory feedback I sometimes perceived that I was keying dahs when I was really just keying a series of dits, like a type of tactile beat deafness↗. This disappears quickly when hearing the correct rhythm again.

- When adjusting the feedback (playback) speed to the dah instead of the dit, it seemed too long and unnatural (possibly for the same reasons mentioned above). The dit seems to be the best basis for the speed one is perceiving they are keying, perhaps because the inter-element pause is also the same length, I don't know, but the answer probably lies in the anecdotal knowledge of experienced and fluent Morse and CW operators.

- I found that a 16-bit integer is just large enough to store the temporal resolution needed for the timing algorithm (assuming the timer is scaled properly), but an 8-bit integer is too coarse.

- When storing the Morse alphabet and the 4 non-letters in C struct bitfields using 5 bits, 2 of the possible 32 bits remain unused, as 00000 and 00001 will never occur, but they could used for control signals. The non-letters don't have to be stored, but I found that it simplified my code, saving program space.

- Even though I am storing the Morse alphabet in a typical, boring, "dense" memory structure, a sequential array, where context usually has no meaning, in this case its sequential order is context and has meaning, as the letters are stored in alphabetical order, which is key to easily deciphering it via ASCII. Some people have stored their Morse alphabets in more "sparse" structures, but this seems to add a level of complexity that erodes some of the efficiencies of any simple algorithm.

- While writing this article on this wiki of my own design, I noticed that the actual inventor of the Wiki, Ward Cunningham↗, is also a ham, and he and his friend Wayne wrote a Morse teacher↗ in assembly language on an 8-bit Intel 8048 microcontroller in 1978.

- What are the possible consequences of not knowing International Morse Code, even if you aren't a ham radio operator? Imagine if you or someone else were trapped and could hear someone tapping on the other side of a wall but could not hear their voice. How would you communicate with them quickly? It would be almost impossible to do so without previous knowledge of Morse code, as both parties would have to agree on some sort of numerical substitution cipher (e.g. A=1, B=2, etc.). It would take a lot of time for people to tap back and forth to determine that they are in agreement on a cipher, and then it would probably take a lot of time to communicate (Y would require 25 taps in a simple substitution cipher, for example.)